Actual Intelligence + Artificial Intelligence = 🥇🏆🎉

Partner with AI; Don't Defer to It

AI has grown exponentially, becoming commonplace in just the last two years, and the rate of change is insanely fast. In that environment, how relevant could a book about AI written 15 years ago be?

That was the question I was asking myself when I sat down to read Jaron Lanier's "You Are Not a Gadget"[1]. My introduction to Jaron (a founder of the field of virtual reality) was in The Social Dilemma[2]. Then he showed up again in a Cal Newport essay in The New Yorker[3]. In that essay, Cal mentioned this book, so I bought it to get to know Jaron better. But when the book arrived and I saw the publication date, I worried that I had chosen poorly.

I am very happy to report that I was wrong to doubt the relevance of Jaron's words. Much like Neil Postman's in "Amusing Ourselves to Death"[4], Jaron's words are timeless, because they speak less about the state of the art at the time and more about the implications that the pursuit of artificial intelligence will have on society.

I found many gems in "You Are Not a Gadget", but the one that stood out most to me is, "The Turing Test cuts both ways." Alan Turing, often called the father of modern computer science, wrote a paper in 1950 titled "Computing Machinery and Intelligence." In it, he described a test of whether a computer's intelligence can be distinguished from a human's. He called this the "imitation game"; today it is commonly known as the Turing Test.

The Turing Test involves three participants in isolated rooms: a computer contestant, a human contestant, and a judge. The human judge can converse with both contestants by typing into a terminal. Both contestants try to convince the judge that they are the human. If the judge cannot consistently tell which is which, then the computer wins the game.

Jaron's "cutting both ways" point is that two factors can contribute to the computer winning this game. The first is the computer becoming more intelligent. The second is the human becoming less intelligent. Both reduce the judge's ability to distinguish between computer and human.

As we continue to rely more and more on technology, we are seeing a widespread dumbing down of society. And now, with the introduction of AI, arguably the most potent technology ever, we are already primed to be very accepting of it, even deferential to it.

Our job in Turing's imitation game is to be the strongest human contestant we can be, to raise the bar for what AI has to demonstrate.

The Grandest Synthesis?

It's easy to be in awe of AI's knowledge base. In an earlier post, I talked about each of us being a Grand Synthesis[5] of everything we've been exposed to and internalized into our own story. And when we think about the volume of information that AIs are exposed to when their large language models (LLMs) are trained, it's easy to conclude that our individual Grand Synthesis pales in comparison.

AI excels at synthesizing everything that has ever been written down. But it lacks the depth of everything not written down, like common sense and intuition, which we have picked up from a combination of our genes and our observations of the world around us. In an earlier report from 1948, Turing used the word "initiative" for this class of knowledge, describing it as "mental actions apparently going beyond the scope of a 'definite method'".

Hallucination is the word we've adopted to describe AI confidently getting something completely wrong. The word connotes something dreamed up out of thin air, as if we can't fathom how AI reached that conclusion. But it's less mysterious when you consider that AI's knowledge base lacks everything that was never recorded. We assume a certain level of common sense and intuition in the other humans we interact with, so it surprises us when AI doesn't exhibit them. We call that a hallucination, but really it's just a hole, and a very large one.

AI is not as grand of a synthesis as we think.

Why Do We Believe AI?

Have you ever played the game Balderdash[6]? Each round, everyone is given the same obscure word from the dictionary, and each player makes up a definition for it. All of these definitions, mixed in with the real one, are read aloud and voted on. The more votes your made-up definition draws, the better you do. The key is not to know the right definitions of uncommon words, but simply to sound convincing.

The phraseology AI uses makes it a phenomenal Balderdash player. It rarely says "perhaps" or "hmm, let me think." You are reading polished, well-crafted responses. And the sheer volume of its responses impresses you, too. Imagine playing Balderdash with a table of humans and one AI. Each of you has one minute to formulate a definition. Imagine hearing five short definitions from the humans and then one encyclopedia-length entry from the AI, perhaps with supporting charts and figures.

The phrasing AI uses and the volume of its response add credibility.

Critical Thinking

The wealth of information that AI is trained on and synthesizes, combined with how convincingly it presents its responses, sets us up to be impressed by AI. And if we're too busy saying "wow," we'll most likely forget to say "wait." So always start your interactions with AI by reminding yourself that it has gaping holes in its knowledge base. It can do a lot of great analysis for you, but it can also miss the mark completely, and it's up to you to spot where it wanders. To be the best human contestant in the imitation game, we need to be healthily critical and always ready to say "wait a minute," "hold on," "that doesn't add up," …

Satya Nadella has long advocated for changing Microsoft's culture from being a population of know-it-alls to being a population of learn-it-alls. This deeply resonates with me (and I'm going to cover this across several posts on uplevel pro later). We should always be on the lookout for learning opportunities, as part of our daily exchanges. In the context of AI exchanges, I think there's a new "-it-all" we need to adopt. We need to be "reason-it-alls", or perhaps "confirm-it-alls". Don't just take AI at its word. Look for holes. Check the references.

Pair Brainstorming

There's individual work. There's teamwork. And then there's pairwise work. Two people partnering on a problem. Each bringing their best, which naturally evokes the best from their partner. Each activating the other. At its best, each contributor is completely engaged. They are all-in.

Of every type of brainstorming I've ever done, pairwise brainstorming has been the most fruitful for me. It's two people riffing off of each other, and as the brainstorming continues, the collective momentum builds. Your collective Energy Flywheel[7] can get spinning crazy fast. "What about …?", "Yes, and …", "Then we can …". The biggest challenge becomes making sure that your whiteboard scribbles keep up with the accelerating conversation.

The most attractive thing to me about AI is having an on-demand brainstorming partner. We are equals in this effort. I am not deferring to AI. I am challenging its responses. And inevitably, the AI sparks new insights that really propel the conversation forward.

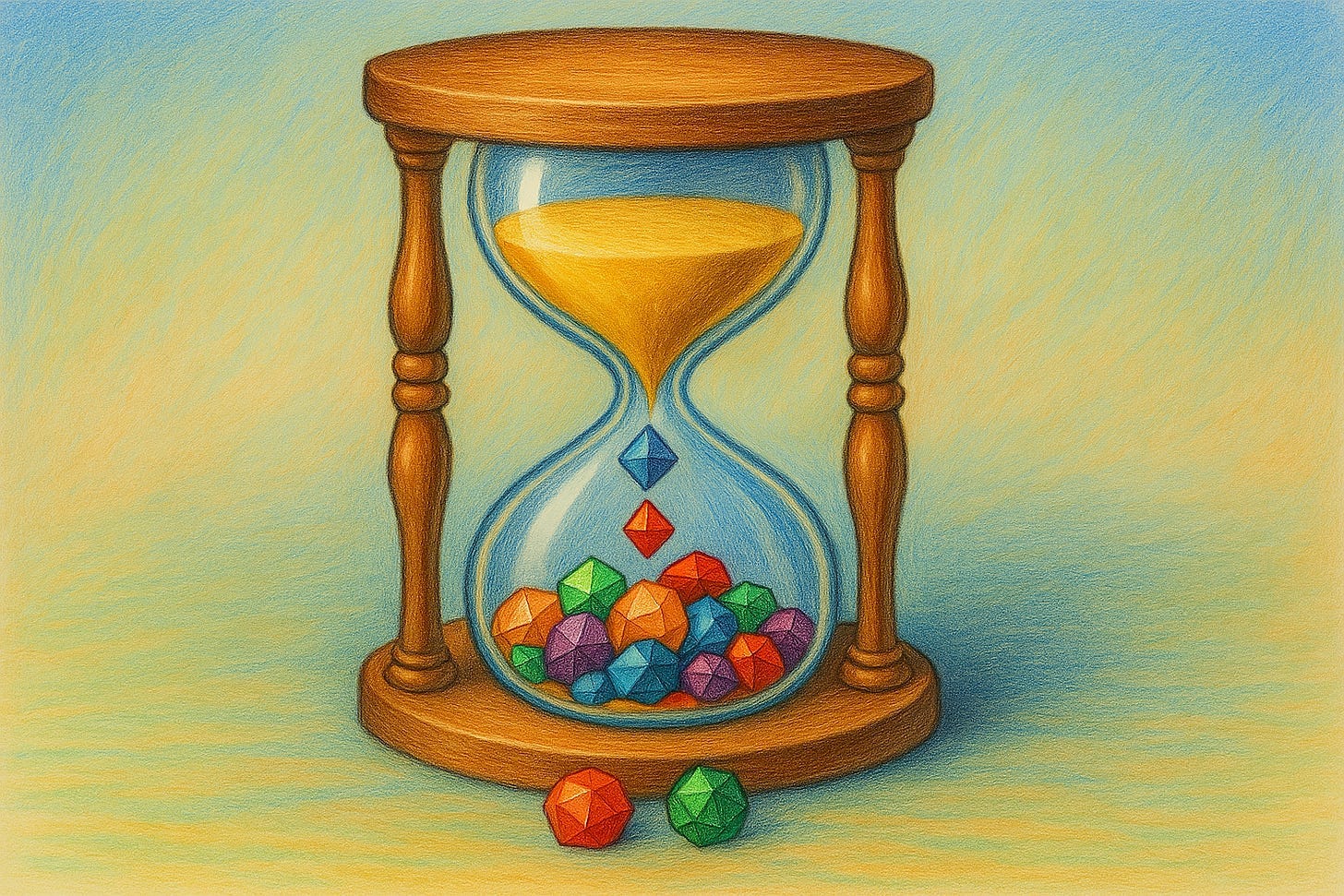

One concrete example of this is how I build images for these posts. When I first started, I would give Copilot a description of the image I wanted and take whatever it gave me. But once I reached a topic I had no clear mental image for, the exchange with AI became much more of a conversation. I couldn't picture the image I wanted for my Finite Time[8] post, so I gave Copilot a summary of the article, and we started talking about what images could convey that summary. Copilot came up with the hourglass, and then I came up with gems coming out of the bottom.

As I continued this conversation post after post, we discovered a connecting thread: using gems in these images to signify the creation of value.

As a partner, AI can help you create the highest value.

Footnotes

2. The Social Dilemma, which I also touched on in "Nuance and Generosity" and "Control Your Phone, Or Your Phone Will Control You"

3. "It's Time to Dismantle the Technopoly," Cal Newport (The New Yorker), which also talks more about Neil Postman, whom I talk about in the next footnote ⬇️
